I have spent over 40 years preaching the need for schools designed for success rather than for failure. Yesterday I happened upon an article by Nicholas Donohue that presents convincing evidence that this is being done by transforming high schools in the New England states. It is called student-centered learning. Also see Andrew Cohen What School Could Be.

My attempt in 1981-1989 used a campus computer system at NWMSU, textbook, lecture, laboratory, AND voluntary student presentations, research, and projects. This work has been further developed in Multiple-Choice Reborn and summarized in Knowledge and Judgment Scoring - 2016. In 1995, Knowledge Factor patented an online confidence-based learning system (now in amplifier).

Masters, 1982, developed Rasch partial credit scoring (PCS).

All three put the student in the position of being in charge of learning and reporting, at all levels of thinking. They approached evaluating an apple from the skin, as is done in traditional multiple-choice (guess) testing.

Partial Credit Scoring just polished the apple skin. The emphasis was still on the surface, the score, at that time. Knowledge Factor made the transition from the concrete level of thinking to understanding (skin to core), and provided the meat between in amplifier. Nuclear power plant operators and doctors were held to a much higher responsibility (self-judgment) standard (far over 75%, over 90% mastery) than is customary in a traditional high school classroom (60% for passing).

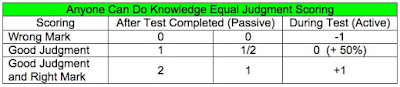

My students voted to give knowledge and judgment equal value (1:1 or 50%:50%). Voluntary activities replaced one letter grade (10% each). The students were then responsible for reporting what they knew or could do. They could mix several ways of learning and reporting.

A student with a knowledge score of 50% and a quality score of 100% would end up with about the same test score as a student who marked every question (guessed) for a quality, quantity, and test score of 75% (with no judgment).
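The arithmetic behind that comparison can be sketched in a few lines. This is only an illustration under stated assumptions: it assumes that equal weighting of knowledge and judgment means 2 points for a right mark, 1 point for an omitted question, and 0 for a wrong mark, and it assumes a 20-question test. The function name `kjs_scores` and the example counts are hypothetical, not taken from the original course records.

```python
def kjs_scores(right: int, wrong: int, omitted: int) -> dict[str, float]:
    """Return quantity, quality, and test score as fractions of 1.

    Assumed point scheme (an illustration, not a documented standard):
    right = 2 points, omitted = 1 point, wrong = 0 points.
    """
    total = right + wrong + omitted
    marked = right + wrong
    if total == 0:
        raise ValueError("the test must have at least one question")

    # Quantity (knowledge): share of ALL questions answered correctly.
    quantity = right / total
    # Quality (judgment): share of MARKED questions answered correctly.
    quality = right / marked if marked else 0.0
    # Test score: points earned out of the 2-per-question maximum.
    test_score = (2 * right + omitted) / (2 * total)

    return {"quantity": quantity, "quality": quality, "test_score": test_score}


# Hypothetical 20-question test.
# "Scholar": marks only the 10 questions they can trust, all right.
scholar = kjs_scores(right=10, wrong=0, omitted=10)
# "Tourist": marks every question, 15 right and 5 wrong.
tourist = kjs_scores(right=15, wrong=5, omitted=0)

print("scholar:", scholar)
print("tourist:", tourist)
```

Under these assumptions both students receive the same test score, but only the separate quality score shows that the first student reported nothing they did not know.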

These two students are very different. One is at the core of being educated (scholar). The other is only viewing the skin (tourist). The first one has a solid basis for self-instruction and further learning and is ready for independent scholarship. The apple seeds germinate (raise new questions) and produce more fruit (without the tree).

We know much less about the second student, and about what must be “re-taught”. The apple may just be left on the tree in what is often a vain effort to ripen it. Such is the fate of students in schools designed for failure (grades A to F).

In extreme cases, courses are classified by difficulty or assigned PASS/FAIL grades. My General Biology students were even “protected” so that I could not know which students were in the course for a grade and which for pass/fail.

Students assess the level of thinking required in a course by asking on the first day, “Are your tests cumulative?” If so, they leave. This is a voluntary choice to stay at the lowest levels of thinking. Memory care residents do not have that choice.

There is a frightening parallel between creating a happy environment for memory care residents here at Provision Living at Columbia, and creating an academic environment (national, state, school, and classroom) that yields a happy student course grade. Both end up at the end of the day pretty much where they started, at the lowest levels of thinking.

Many students made the transition from memorizing nonsense for the next test to questioning, answering, and verifying: learning for themselves and knowing they were “right”. This is self-empowering. They started getting better grades in all of their courses. They had experienced the joy of scholarship, an intrinsic reward. “I do know what I know.” The independent quality score in knowledge and judgment scoring directed their path.

Student-centered learning is not new. The title is. This is important in marketing to institutionalized education. What is new is that entire high schools are at last being transformed for the right reason: student development rather than standardized test scores based on lower levels of thinking during instruction and testing.

These students should be ready for college or other post-high-school programs. They should not be the under-prepared college students we worked with. The General Biology course was to last for only a few years, until the high schools did all of this work. In practice, the course became permanent. Biology did not become a required course in all high schools.

My interest in this project was to find a way to know what each student really knew, believed, could do, and was interested in, when a new science building was constructed in 1980 with 120-seat lecture halls. The unexpected consequence of promoting student development, based on the independent quality and quantity scores, was not only a bonus but was also needed by under-prepared college students. Over 90% of students voluntarily switched from guessing right answers to reporting what they actually knew and could do.

In my experience, the multiple-choice test, when administered and scored properly (quantity and quality) yields as good (if not better) an insight into student ability as many overly elaborate and expensive assessments other than actual performance. Student development (becoming comfortable using higher levels of thinking) is an added bonus.
